We expect these technology trends to act as force multipliers of digital business and innovation over the next three to five years.

1. Robotic Process Automation (RPA)

RPA is an application of technology, governed by business logic and structured inputs, aimed at automating business processes. Using RPA tools, a company can configure software, or a “robot,” to capture and interpret applications for processing a transaction, manipulating data, triggering responses and communicating with other digital systems. RPA scenarios range from something as simple as generating an automatic response to an email to deploying thousands of bots, each programmed to automate jobs in an ERP system.
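The simplest scenario mentioned above, an automatic response to an email, can be sketched as a few lines of business logic applied to a structured input. This is only an illustrative toy, not how any particular RPA product works: the `auto_reply` function, the `RULES` table, and the sample email are all hypothetical names invented for this example.

```python
def auto_reply(email, rules):
    """Return the reply for the first rule whose keyword appears in the subject."""
    subject = email["subject"].lower()
    for keyword, reply in rules:
        if keyword in subject:
            return reply
    return None  # no rule matched; leave the message for a human


# Hypothetical business rules: (keyword in subject, canned response).
RULES = [
    ("invoice", "Thanks, your invoice has been forwarded to accounts payable."),
    ("password", "Please use the self-service portal to reset your password."),
]

# Hypothetical structured input representing one incoming message.
email = {"sender": "user@example.com", "subject": "Invoice #1234 attached"}
reply = auto_reply(email, RULES)
print(reply)
```

Messages that match no rule return `None`, which reflects the usual division of labor: the bot handles the predictable cases and a person handles the rest.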

2. Edge Computing

Edge computing is a distributed computing framework that brings enterprise applications closer to data sources such as IoT devices or local edge servers. This proximity to data at its source can deliver strong business benefits, including faster insights, improved response times and better bandwidth availability.

The explosive growth and increasing computing power of IoT devices have resulted in unprecedented volumes of data. And data volumes will continue to grow as 5G networks increase the number of connected mobile devices.

In the past, the promise of cloud and AI was to automate and speed innovation by driving actionable insight from data. But the unprecedented scale and complexity of data that’s created by connected devices has outpaced network and infrastructure capabilities.

Sending all that device-generated data to a centralized data center or to the cloud causes bandwidth and latency issues. Edge computing offers a more efficient alternative: data is processed and analyzed closer to the point where it’s created. Because the data does not have to cross a network to a cloud or data center to be processed, latency is significantly reduced. Edge computing, including mobile edge computing on 5G networks, enables faster and more comprehensive data analysis, creating the opportunity for deeper insights, faster response times and improved customer experiences.
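The bandwidth argument can be illustrated with a minimal sketch, assuming a device that collects temperature samples and forwards only a summary upstream. The function name `summarize_at_edge`, the threshold, and the sample readings are hypothetical and chosen only for illustration.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Reduce raw sensor readings to a compact summary on the edge node."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Only readings above the threshold are kept individually.
        "alerts": [r for r in readings if r > threshold],
    }


# Hypothetical temperature samples collected by one device.
raw_readings = [21.0, 21.5, 22.0, 35.0, 21.5]

# The summary, not the raw list, is what would be sent to the cloud.
summary = summarize_at_edge(raw_readings, threshold=30.0)
print(summary)
```

With five samples the saving is trivial, but the same pattern applied to thousands of readings per second means the network carries a handful of numbers instead of the full stream, and the out-of-range reading is detected locally without a round trip.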

3. Quantum Computing

What is quantum?

The quantum in “quantum computing” refers to the quantum mechanics that the system uses to calculate outputs. In physics, a quantum is the smallest possible discrete unit of any physical property. It usually refers to properties of atomic or subatomic particles, such as electrons, neutrinos, and photons.

What is a qubit?

A qubit is the basic unit of information in quantum computing. Qubits play a role in quantum computing similar to the one bits play in classical computing, but they behave very differently. Classical bits are binary and can hold only a value of 0 or 1, but qubits can hold a superposition of all possible states.
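The difference between a bit and a qubit can be made concrete with a small classical simulation. The sketch below assumes the standard textbook description of a single qubit as a pair of complex amplitudes whose squared magnitudes give the measurement probabilities. The Hadamard gate is a standard quantum gate brought in here for illustration and is not discussed in this article. The function names are hypothetical.

```python
import math


def measurement_probabilities(state):
    """Return the probabilities of measuring 0 and 1 for a single-qubit state."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2


def hadamard(state):
    """Apply the Hadamard gate, which turns a basis state into an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


# The state |0>: the qubit behaves like a classical bit set to 0.
zero = (1 + 0j, 0 + 0j)

# After a Hadamard gate, the qubit is in a superposition of 0 and 1.
plus = hadamard(zero)
p0, p1 = measurement_probabilities(plus)
print(p0, p1)  # both approximately 0.5
```

This runs on an ordinary computer and only tracks the arithmetic of one qubit. Simulating many qubits this way becomes expensive quickly, because the number of amplitudes doubles with each added qubit.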

What is quantum computing?

Quantum computers harness the unique behavior of quantum physics—such as superposition, entanglement, and quantum interference—and apply it to computing. This introduces new concepts to traditional programming methods.

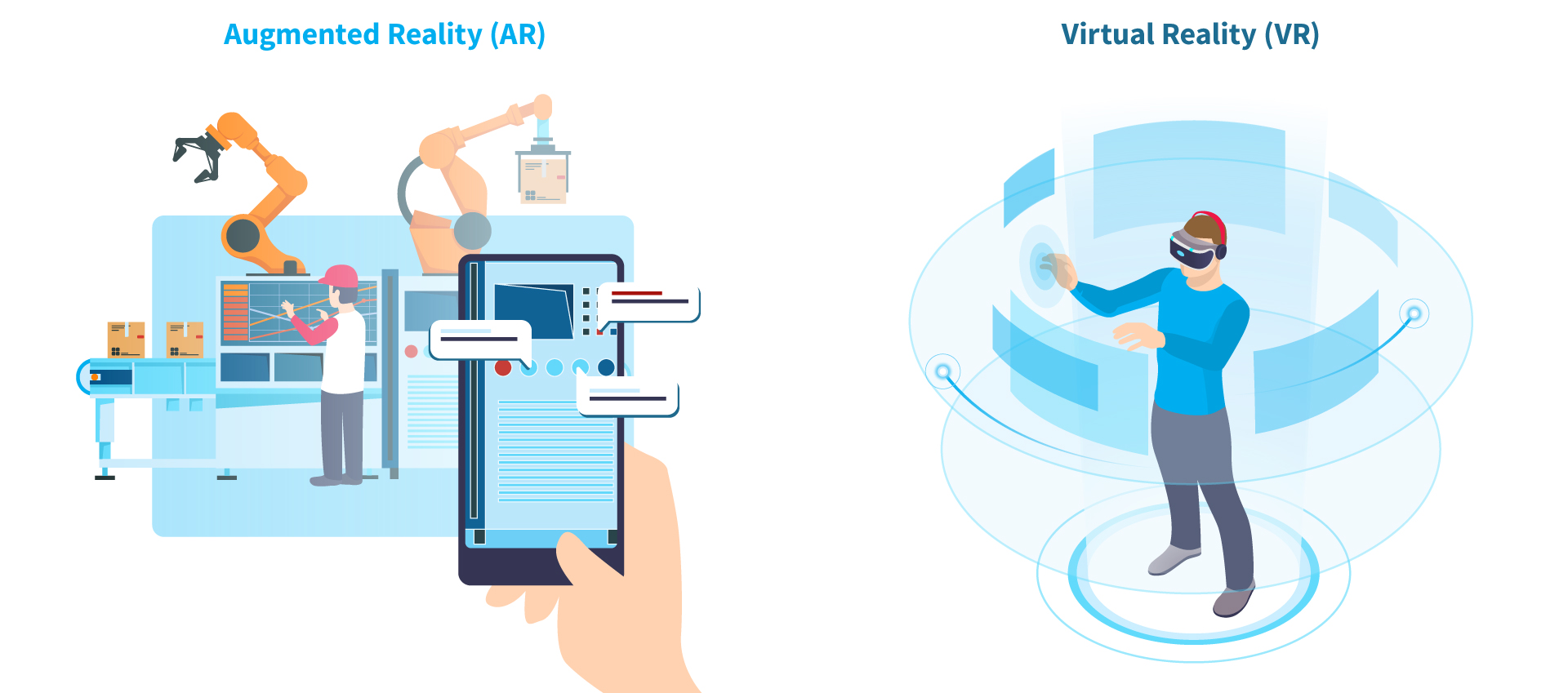

4. Virtual Reality and Augmented Reality

What Is AR?

Almost anyone with a smartphone can access augmented reality, making it more accessible than VR as a branding and gaming tool. AR turns the mundane physical world into a colorful, visual one by overlaying virtual pictures and characters on the view from a phone’s camera or video viewer. Augmented reality simply adds to the user’s real-life experience.

What Is VR?

Virtual reality takes these same components to another level by producing an entirely computer-generated simulation of an alternate world. These immersive simulations can create almost any visual or place imaginable for the player using special equipment such as computers, sensors, headsets, and gloves.

5. Artificial Intelligence (AI) and Machine Learning

Artificial Intelligence

Artificial intelligence is a field of computer science concerned with building computer systems that can mimic human intelligence. The term combines two words, “artificial” and “intelligence,” and means “a human-made thinking power.” Hence we can define it as follows:

Artificial intelligence is a technology with which we can create intelligent systems that simulate human intelligence.

Machine learning

Machine learning is about extracting knowledge from data. It can be defined as follows:

Machine learning is a subfield of artificial intelligence, which enables machines to learn from past data or experiences without being explicitly programmed.

Machine learning enables a computer system to make predictions or decisions from historical data without being explicitly programmed. It uses large amounts of structured and semi-structured data so that a machine learning model can produce accurate results or predictions based on that data.
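A minimal sketch of "learning from past data without being explicitly programmed" is fitting a straight line to historical observations and using it to predict a new value. The example below uses ordinary least squares, one of the simplest learning methods, chosen here only for illustration. The data points and the function name `fit_line` are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to the data by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historical data that happens to follow y = 2x + 1.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

slope, intercept = fit_line(xs, ys)

# Predict the outcome for an input the model has not seen.
prediction = slope * 6 + intercept
print(slope, intercept, prediction)
```

Nowhere does the code state the rule "multiply by 2 and add 1"; the parameters are recovered from the data. Practical machine learning systems use far larger datasets and more flexible models, but the principle is the same.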